AI accelerators (Nvidia and AMD GPUs, hyperscalers’ in-house chips) receive a lot of attention. AI rack assemblers, the companies that turn a GPU into a system, are equally interesting, and just as concentrated.

They are mostly Taiwanese firms: Hon Hai, Quanta, Wistron, Wiwynn, and module makers such as Accton. Growth at the top suppliers is impressive, and the stocks aren’t expensive.

They serve 2 different markets: customized racks for the hyperscalers, and Nvidia’s standard products. While we spend a lot of time on hyperscalers’ capex, it is possible that the standard racks going into AI data centers, or “AI factories”, will start outgrowing hyperscalers’ spending.

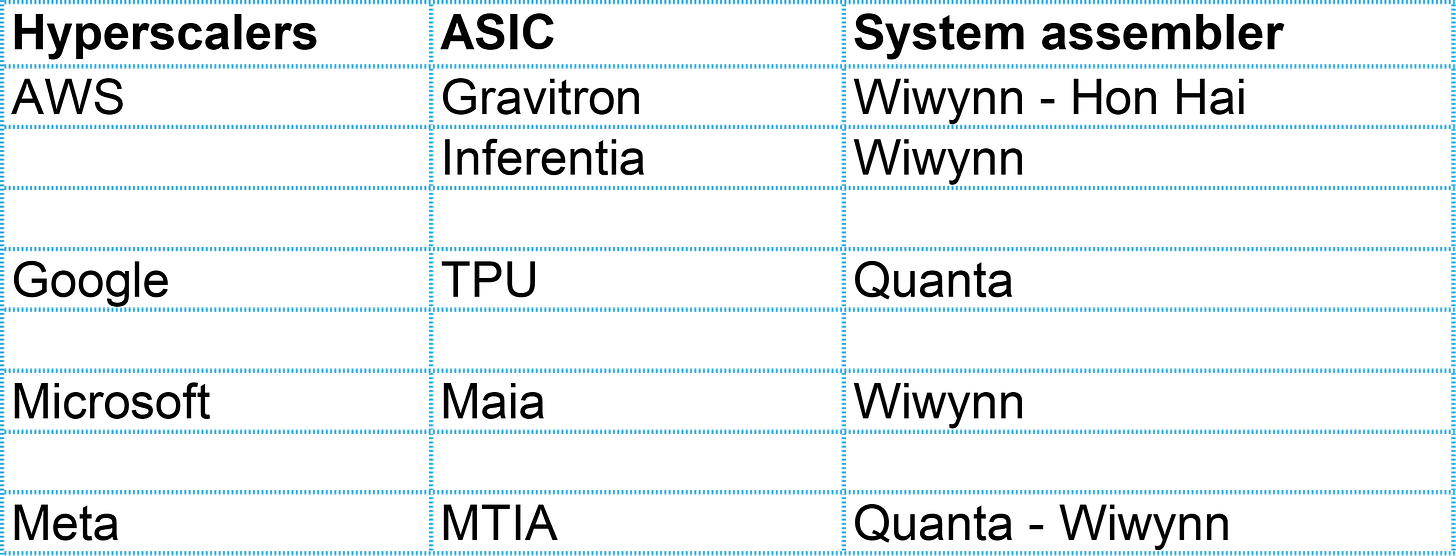

AI accelerator rack system assemblers

We talk a lot about Nvidia and TSMC revenues, their complex roadmaps, the competition from the hyperscalers’ in-house ASICs, and the ASIC design firms (Broadcom, Marvell). There is an equally concentrated industry: the big rack designers and assemblers, the companies that turn a GPU into a system.

The systems (the picture above is from Nvidia CEO Jensen Huang’s keynote at Computex 2025) bear the names of their end users: Microsoft, AWS, Meta, Google. But the hyperscalers do not design, let alone manufacture, these racks. Even Dell doesn’t make anything. They outsource to system assemblers that:

1) have been making servers and racks for at least 20 years

2) are almost all Taiwanese

3) master a long and diverse range of weird skills, all rooted in the physical world: when there are miles of cables in a rack, how do you make sure all the data moves at the same speed? When a rack draws 150,000 watts, how do you deal with heat dissipation, cooling and vibration? These racks are the size of your fridge, but your fridge burns 500 watts.

The major system assemblers

Nvidia publishes a list of its cloud and data center partners. There are 16 firms in this picture, but here are the 5 big ones.

Hon Hai a.k.a. Foxconn (not in the picture, for reasons explained below), ticker 2317 TW

QCT (Quanta Cloud Technology), an unlisted subsidiary of Quanta Computer, ticker 2382 TW. Both QCT and Quanta make server racks for Nvidia, but not the same racks (see below).

Supermicro, the only US firm on this list, ticker SMCI US

Wistron ticker 3231 TW

Wiwynn, a subsidiary of Wistron that is consolidated in Wistron’s financials but also listed stand-alone, ticker 6669 TW

To these big 5 system makers, we should add 2 important subcontractors that make switch modules (data transmission modules):

Hon Hai a.k.a. Foxconn is not in the picture above because the picture shows the manufacturing partners for Nvidia’s modular system architecture, called MGX. This is the architecture hyperscalers use to customize the GPU/CPU (x86, Nvidia or other Arm), networking (Nvidia or Ethernet) and memory/storage.

If you’re not a hyperscaler, you have neither the need nor the engineering resources to customize your infrastructure. If you’re an enterprise user or a smaller data center, you’ll buy a “standard” Nvidia DGX rack such as the GB200 NVL72, and these are made by the 5 firms above, including Hon Hai, plus Gigabyte, Inventec, MSI and Pegatron.

Two different end-markets

We have 2 big markets here:

1. The hyperscaler market which is composed of 2 things:

a. Nvidia GPU racks made by QCT, Quanta Computer, Supermicro, Wistron, Wiwynn. Nvidia qualifies these firms, but it does not decide who makes what: the hyperscalers are the customers.

b. In-house ASIC racks: Wiwynn is the dominant supplier, followed by Quanta. Here again, the hyperscalers are the customers.

2. The standard Nvidia DGX racks for enterprises and smaller data centers.

Hon Hai is the main supplier of NVL72 servers. Quanta is the main assembler of NVL36. Wistron, Inventec, Gigabyte and ASUS make base-configuration models, without any customization.

Side note: it is becoming very difficult to track and reconcile revenues along the supply chain. An Nvidia GPU (the chip alone) costs US$30k, a simple H100 server costs $400k, and a big GB200 NVL72 rack costs $5m, so the same GPU shows up as very different revenue depending on the product it ships in.

We might be confident that in 2025 Nvidia will sell 5 million AI GPUs, or that AWS will buy 1m AI accelerators (Trainium) and 3m CPUs (Graviton). That’s cool. But Nvidia does not only sell chips; it also sells systems, and I do not think anyone knows the mix. Similarly, we do not know the configuration of AWS’s racks. In other words, between chip revenues (TSMC) and system revenues (the rack assemblers), we don’t have enough visibility.
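To illustrate the visibility problem, the Python sketch below converts a fixed number of GPUs into the dollar value of the products they finally ship in. It uses the rough prices quoted above; the GPUs per product (8 per H100 server, 72 per NVL72 rack) and the two sales mixes are assumptions for illustration only, not data from Nvidia or the assemblers.

```python
# Hypothetical illustration: end-product value implied by the same GPU count
# under different sales mixes. Prices are the rough figures quoted in the text;
# GPUs per product and the mix shares are assumptions, not reported data.

PRICE_PER_UNIT = {
    "chip": 30_000,           # GPU sold alone (~US$30k)
    "h100_server": 400_000,   # "simple" H100 server (~$400k)
    "nvl72_rack": 5_000_000,  # GB200 NVL72 rack (~$5m)
}

GPUS_PER_UNIT = {
    "chip": 1,
    "h100_server": 8,   # assumption: 8-GPU server
    "nvl72_rack": 72,   # NVL72: 72 GPUs per rack
}


def implied_revenue(total_gpus: int, mix: dict[str, float]) -> float:
    """Value of the end products the GPUs ship in (chip, server or rack).

    `mix` gives the share of GPUs sold in each form; shares must sum to 1.
    """
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("mix shares must sum to 1")
    revenue = 0.0
    for form, share in mix.items():
        units = total_gpus * share / GPUS_PER_UNIT[form]  # may be fractional
        revenue += units * PRICE_PER_UNIT[form]
    return revenue


gpus = 5_000_000  # the 2025 Nvidia AI GPU figure from the text
mix_a = {"chip": 0.6, "h100_server": 0.3, "nvl72_rack": 0.1}  # hypothetical
mix_b = {"chip": 0.2, "h100_server": 0.3, "nvl72_rack": 0.5}  # hypothetical

for name, mix in [("mix A", mix_a), ("mix B", mix_b)]:
    print(f"{name}: ${implied_revenue(gpus, mix) / 1e9:.0f}bn")
```

With these placeholder mixes, the same 5 million GPUs come out at roughly $200bn versus roughly $279bn of end-product value, which is why chip volumes alone say little about the assemblers’ revenues.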

Nvidia’s big plan to cover the world with 1 “planetary-scale AI factory”

Around Computex Taiwan in May 2025, Nvidia released a lot of news, and this item did not capture much attention: “NVIDIA Announces DGX Cloud Lepton to Connect Developers to NVIDIA’s Global Compute Ecosystem”.

Here is what Nvidia is doing:

Nvidia is supporting, or has invested in, a large number of new data center operators: CoreWeave, Crusoe, Firmus, Foxconn, GMI Cloud, Lambda, Nebius, Nscale, SoftBank, Yotta Data Services. Yes, Foxconn (Hon Hai) is becoming a data center operator in Taiwan.

On top of these numerous data centers that only provide Nvidia AI computing, NVIDIA is adding a management layer:

“DGX Cloud Lepton is an AI platform with a compute marketplace that connects the world’s developers building agentic and physical AI applications with tens of thousands of GPUs, available from a global network of cloud providers”.

Nvidia is connecting all these data centers to form the “DGX Cloud Lepton marketplace”.

“Developers can tap into GPU compute capacity in specific regions for both on-demand and long-term computing, supporting strategic and sovereign AI operational requirements. Leading cloud service providers and GPU marketplaces are expected to also participate in the DGX Cloud Lepton marketplace”

This is Jensen Huang’s vision: “we’re building a planetary-scale AI factory”.

Planetary-scale. Nothing less.

The implication is that while we all spend a lot of time on hyperscalers’ capex, the 1st market described above, Nvidia’s own DGX Cloud Lepton will be based on the 2nd market: standard DGX boxes. Let’s see which market grows faster.

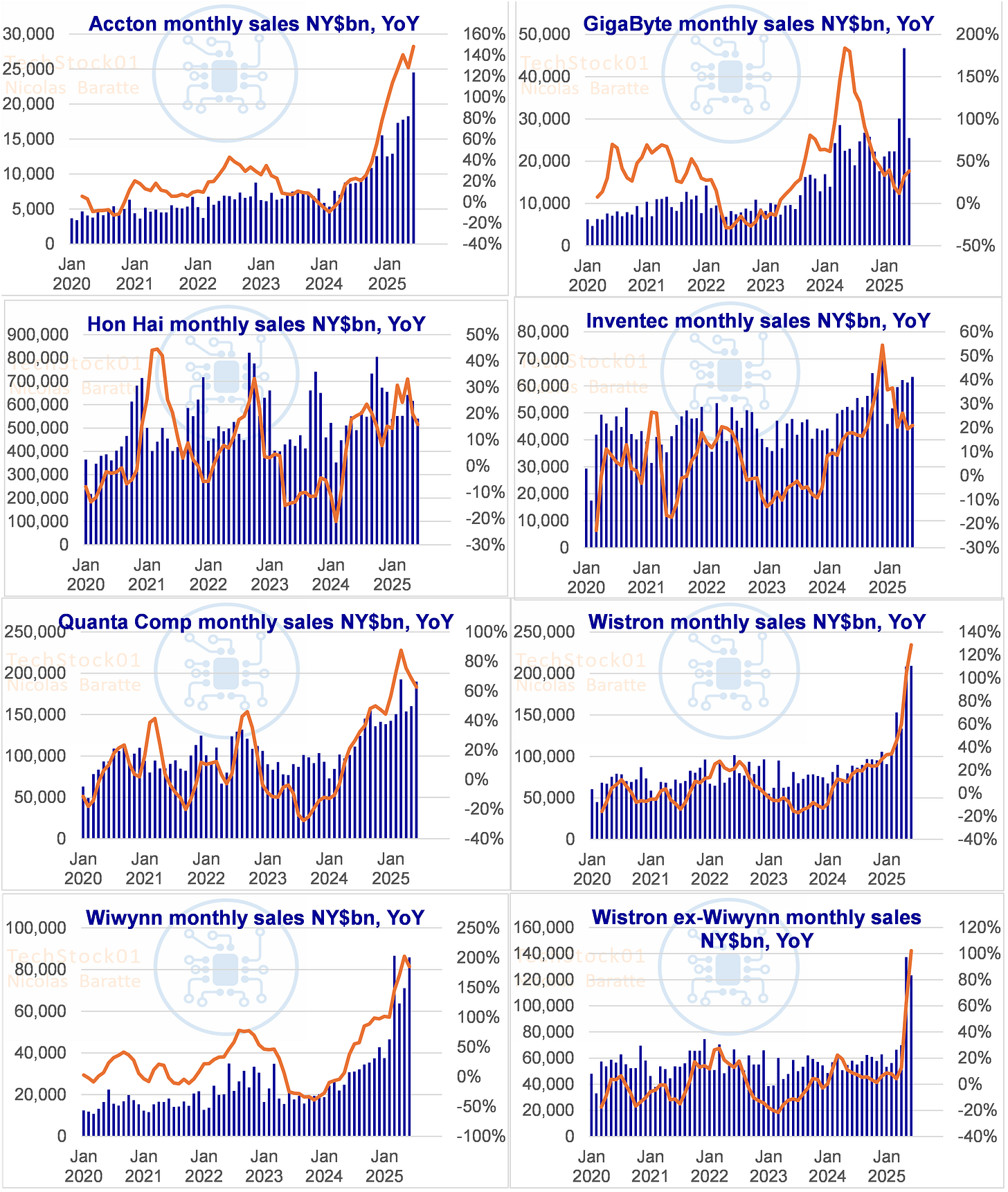

Taiwan system makers’ revenues

Taiwanese listed firms have to report revenues monthly. I have compiled below the revenues of the firms mentioned in this report, the important firms in the supply chain. As you can see, firms are not benefiting equally from the AI investment wave: some see modest growth, others deliver explosive growth. Ranked by year-to-date revenue growth:
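Year-to-date growth from monthly reports is simple arithmetic: cumulative revenue from January through the latest reported month, divided by the same months a year earlier, minus one. The Python sketch below shows that calculation and the ranking step; the firm names and monthly figures are hypothetical placeholders, not the data compiled for this report.

```python
# Sketch: rank firms by year-to-date (YTD) revenue growth from monthly revenues.
# Firm names and figures are hypothetical placeholders (e.g. NT$ billions).


def ytd_growth(current: list[float], prior: list[float]) -> float:
    """YTD growth: sum of this year's months vs the same months last year.

    `current` holds Jan..latest month of this year; `prior` holds last
    year's months starting in January (at least as many as `current`).
    """
    months = len(current)
    if months == 0 or len(prior) < months:
        raise ValueError("need prior-year data for every reported month")
    prior_ytd = sum(prior[:months])
    return sum(current) / prior_ytd - 1.0


monthly = {
    # firm: (this year Jan..Apr, last year Jan..Apr), hypothetical values
    "Firm A": ([120, 110, 130, 140], [100, 95, 105, 110]),
    "Firm B": ([50, 55, 60, 58], [25, 24, 30, 28]),
    "Firm C": ([300, 290, 310, 305], [290, 280, 300, 300]),
}

# Highest YTD growth first
ranked = sorted(
    ((firm, ytd_growth(cur, prev)) for firm, (cur, prev) in monthly.items()),
    key=lambda item: item[1],
    reverse=True,
)
for firm, growth in ranked:
    print(f"{firm}: {growth:+.1%}")
```

Summing the months before dividing, rather than averaging month-by-month growth rates, weights each month by its size, so one lumpy month on a small prior-year base does not dominate the comparison.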

Valuations

Consensus expects a large growth slowdown in 2026-27, and in my opinion that is clearly wrong. It’s a typical sell-side analyst problem: there’s no incentive to forecast big growth that’s hard to justify (i.e. what will cloud capex be in 2027?), and it’s easier to upgrade estimates quarter after quarter.